Mappers and Reducers are the Hadoop servers that run the Map and Reduce functions respectively. While the map is a mandatory step to filter and sort the initial data, the reduce function is optional. All inputs and outputs are stored in the HDFS. The types of keys and values differ based on the use case. The Reduce function also takes inputs as pairs, and produces pairs as output.The Map function takes input from the disk as pairs, processes them, and produces another set of intermediate pairs as output.How MapReduce WorksĪt the crux of MapReduce are two functions: Map and Reduce. That's because MapReduce has unique advantages. However, these usually run along with jobs that are written using the MapReduce model. Today, there are other query-based systems such as Hive and Pig that are used to retrieve data from the HDFS using SQL-like statements. MapReduce was once the only method through which the data stored in the HDFS could be retrieved, but that is no longer the case. Data access and storage is disk-based-the input is usually stored as files containing structured, semi-structured, or unstructured data, and the output is also stored in files. With MapReduce, rather than sending data to where the application or logic resides, the logic is executed on the server where the data already resides, to expedite processing. This reduces the processing time as compared to sequential processing of such a large data set. In the end, it aggregates all the data from multiple servers to return a consolidated output back to the application.įor example, a Hadoop cluster with 20,000 inexpensive commodity servers and 256MB block of data in each, can process around 5TB of data at the same time. MapReduce facilitates concurrent processing by splitting petabytes of data into smaller chunks, and processing them in parallel on Hadoop commodity servers. It is a core component, integral to the functioning of the Hadoop framework. 
MapReduce is a programming model or pattern within the Hadoop framework that is used to access big data stored in the Hadoop File System (HDFS). Initially used by Google for analyzing its search results, MapReduce gained massive popularity due to its ability to split and process terabytes of data in parallel, achieving quicker results. This is where the MapReduce programming model comes to rescue.

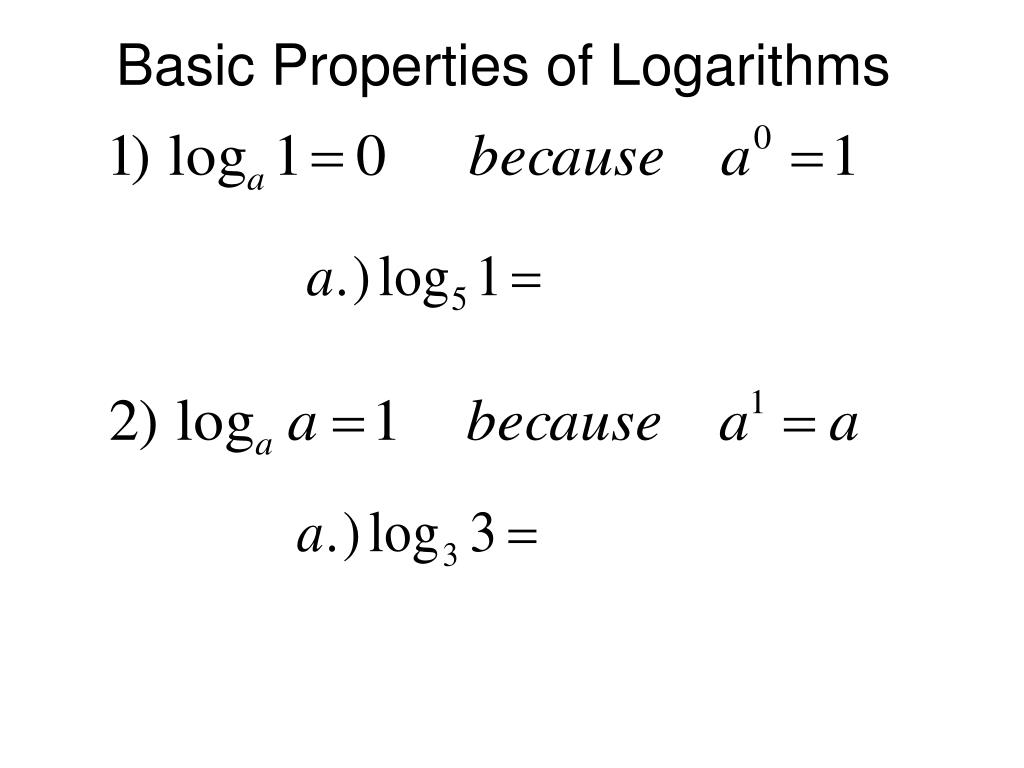

#Using properties to combine and condense logarithms how to#

The challenge, though, is how to process this massive amount of data with speed and efficiency, and without sacrificing meaningful insights.

In today's data-driven market, algorithms and applications are collecting data 24/7 about people, processes, systems, and organizations, resulting in huge volumes of data. Big Data and Privacy: What Companies Need to Know.Big Data and Agriculture: A Complete Guide.Stitch Fully-managed data pipeline for analytics.
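To make the Map, shuffle-and-sort, and Reduce steps more concrete, here is a minimal single-process Python sketch of the classic word-count job. It is not Hadoop code: the names `map_fn`, `reduce_fn`, and `run_job` are hypothetical, everything runs in one process rather than on a cluster, and feeding the mapper (offset, line) pairs is an assumption loosely modeled on how Hadoop's default text input presents each line to a mapper.

```python
from collections import defaultdict
from collections.abc import Iterable, Iterator


def map_fn(offset: int, line: str) -> Iterator[tuple[str, int]]:
    """Map: one input pair (offset, line) -> intermediate (word, 1) pairs.

    The offset key is unused here; it only mirrors the shape of the input pair.
    """
    for word in line.lower().split():
        yield word, 1


def reduce_fn(word: str, counts: Iterable[int]) -> Iterator[tuple[str, int]]:
    """Reduce: (word, [1, 1, ...]) -> a single (word, total) output pair."""
    yield word, sum(counts)


def run_job(lines: list[str]) -> dict[str, int]:
    # Map phase: on a real cluster, many mappers would each handle one input split.
    intermediate: list[tuple[str, int]] = []
    offset = 0
    for line in lines:
        intermediate.extend(map_fn(offset, line))
        offset += len(line) + 1  # character offset of the next line (+1 for newline)

    # Shuffle and sort: order pairs by key and group all values for the same key.
    grouped: dict[str, list[int]] = defaultdict(list)
    for key, value in sorted(intermediate):
        grouped[key].append(value)

    # Reduce phase: one reduce_fn call per distinct key, in sorted key order.
    output: dict[str, int] = {}
    for key, values in grouped.items():
        for out_key, out_value in reduce_fn(key, values):
            output[out_key] = out_value
    return output


if __name__ == "__main__":
    data = ["the quick brown fox", "the lazy dog", "The fox"]
    print(run_job(data))
```

Running the script prints `{'brown': 1, 'dog': 1, 'fox': 2, 'lazy': 1, 'quick': 1, 'the': 3}`. In an actual Hadoop job, the framework rather than user code performs the sort-and-group step during the shuffle, and the mappers and reducers run as separate tasks spread across the cluster.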
